Survey Analysis with ChatGPT: Filtering the noise, getting more user insights

As product designers, we survey users as a core part of our process for uncovering opportunities to improve a product. But one recurring challenge is the time it takes to analyze survey data and translate it into actionable insights.

Traditionally, I would spend hours combing through responses, identifying patterns, and writing reports. Recently, I started experimenting with prompt engineering and large language models (LLMs) to semi-automate parts of this analysis.

For my current employer, we have a custom survey modal embedded in the cancellation flow. I revised the survey questions to remove overlapping options that biased the results twice toward the same theme, and to improve clarity. For example, the previous survey had two separate answer options pointing to music quality, which skewed results toward that theme. I merged them into a single category and removed the unhelpful "Other" option. I also introduced a new category for usability and product issues, to surface recurring pain points from ProTunes One users that could otherwise go unnoticed.

Once the survey was set up, we let it run for a month (and continue collecting responses month by month). Data events are tracked in Mixpanel, then exported as a .csv file, which I feed into ChatGPT 5 for analysis.
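Before the export goes into ChatGPT, it can help to separate the selected cancellation reasons (the quantitative part) from the free-text comments (the qualitative part). The sketch below does that with Python's standard `csv` module. It is only an illustration under assumptions: the column names `cancel_reason` and `comment` are hypothetical, since a real Mixpanel export will use whatever property names the survey events were tracked with, and the sample rows are made up.

```python
import csv
import io

# Hypothetical column names; adjust to match the properties in your Mixpanel export.
REASON_COLUMN = "cancel_reason"  # the option the user selected (quantitative)
COMMENT_COLUMN = "comment"       # the free-text answer (qualitative)


def split_responses(csv_text: str) -> tuple[list[str], list[str]]:
    """Split survey rows into selected reasons and non-empty free-text comments."""
    reasons: list[str] = []
    comments: list[str] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Missing cells come back as None, so fall back to an empty string.
        reason = (row.get(REASON_COLUMN) or "").strip()
        comment = (row.get(COMMENT_COLUMN) or "").strip()
        if reason:
            reasons.append(reason)
        if comment:
            comments.append(comment)
    return reasons, comments


# Made-up sample rows for illustration only.
sample = """distinct_id,cancel_reason,comment
u1,Music quality,Tracks sound compressed on my speakers
u2,Usability and product issues,Playlist sync keeps failing
u3,Music quality,
"""

reasons, comments = split_responses(sample)
print(reasons)   # ['Music quality', 'Usability and product issues', 'Music quality']
print(comments)  # ['Tracks sound compressed on my speakers', 'Playlist sync keeps failing']
```

Keeping the two lists apart means the counts can be checked directly, while only the comments need the model's help with categorization.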

ChatGPT then proposed the following categorization, which lets me analyze the data without tallying the figures by hand:

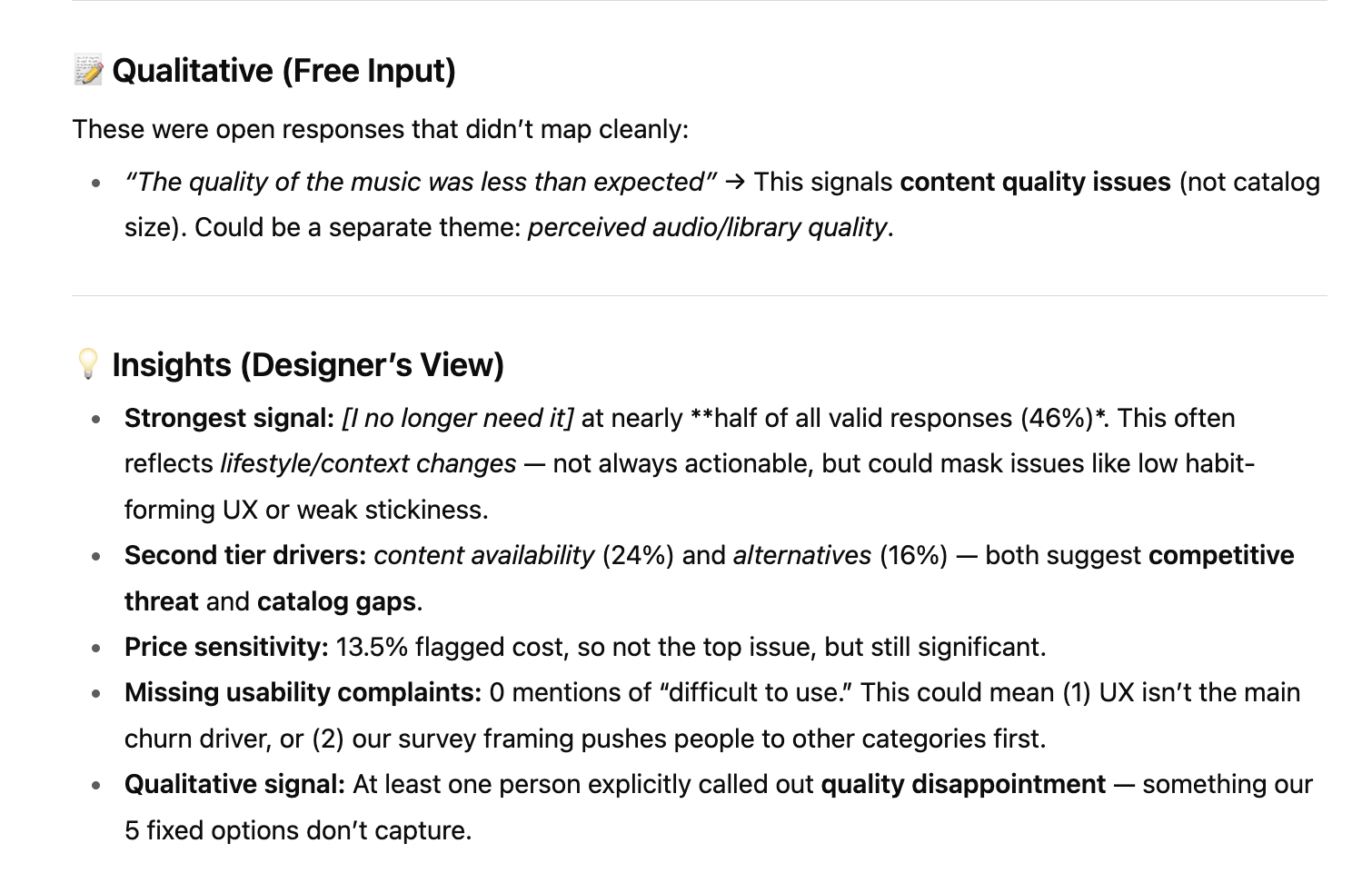

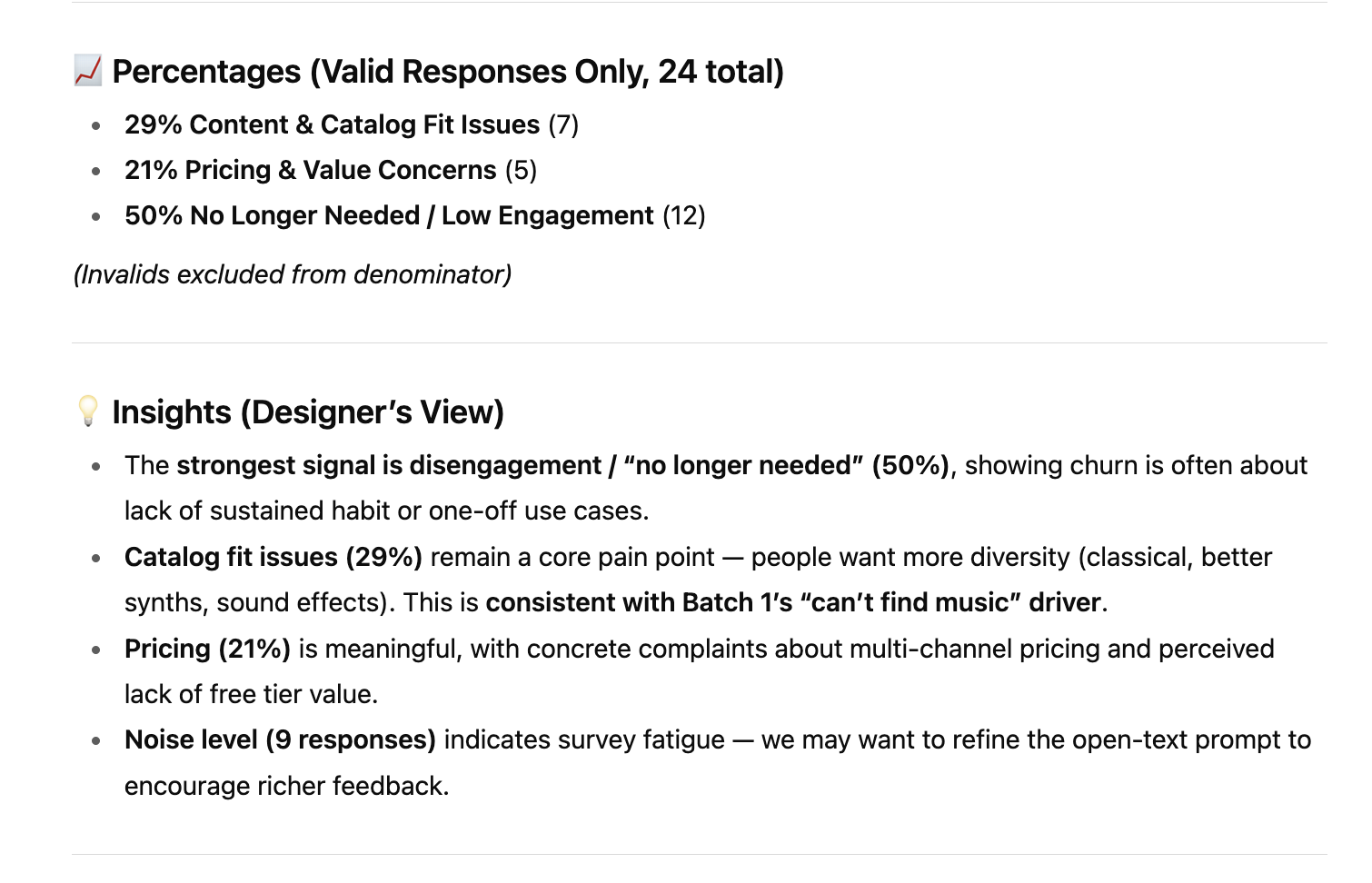

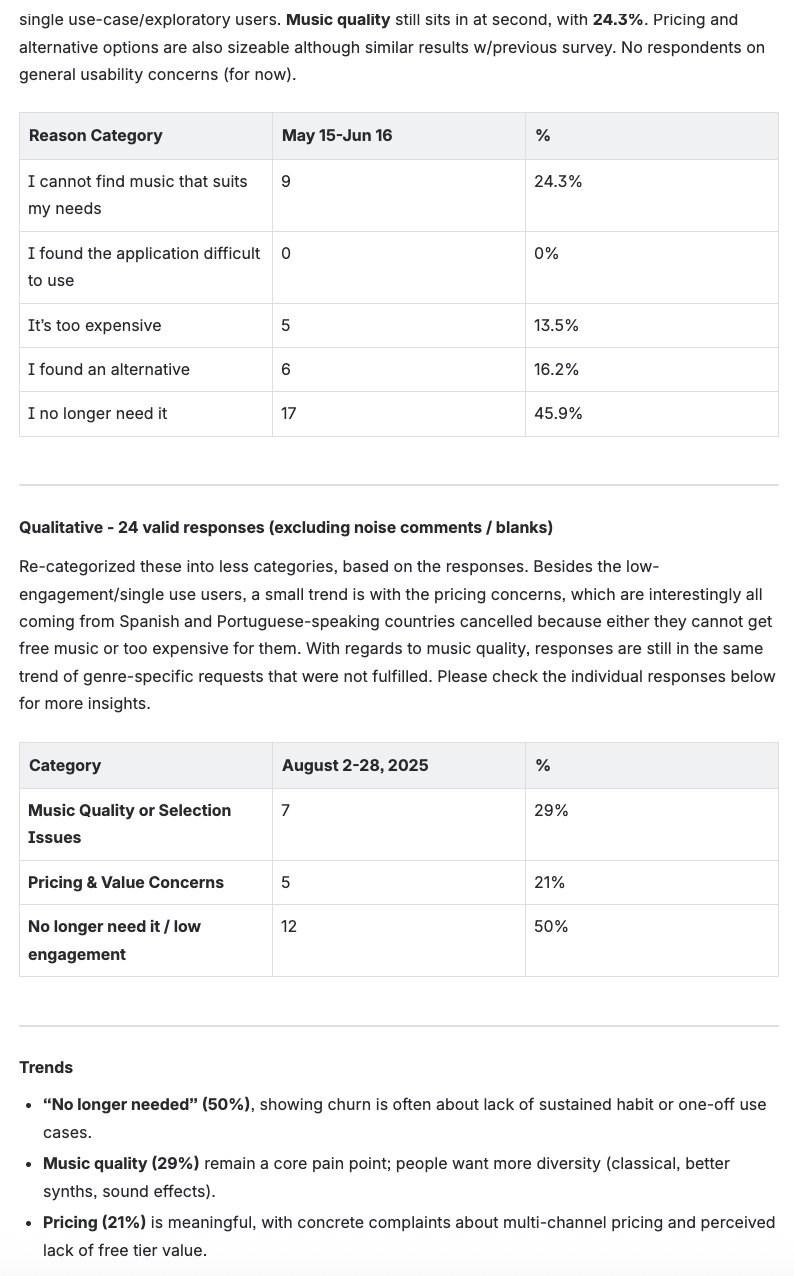
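Once every response carries a category, the figures themselves are simple counts. As a cross-check on the numbers ChatGPT reports, a breakdown like the one below can be recomputed locally with `collections.Counter`. This is a minimal sketch, assuming one category label per response; the labels in the example are hypothetical.

```python
from collections import Counter


def category_breakdown(labels: list[str]) -> list[tuple[str, int, float]]:
    """Return (category, count, percent of responses), most frequent first."""
    counts = Counter(labels)
    total = sum(counts.values())
    # An empty list yields no rows, so the division below never sees total == 0.
    return [
        (category, count, round(100 * count / total, 1))
        for category, count in counts.most_common()
    ]


# Hypothetical labels, one per categorized response.
labels = ["Music quality", "Usability and product issues", "Music quality", "Music quality"]
print(category_breakdown(labels))
# [('Music quality', 3, 75.0), ('Usability and product issues', 1, 25.0)]
```

If the model's percentages differ from these, that is a signal to re-check its categorization before the numbers reach a report.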

After I fed it the survey results from the .csv (split into quantitative and qualitative responses based on the survey above), it gave these results:

I started with some structured prompts to set the prerequisites for analysis, and I keep refining that context from month to month. From there, ChatGPT automatically categorized the responses, creating a clear breakdown of issues and themes. After processing the raw survey data, it provided actionable insights that I could refine with some personal tweaks and format into a Confluence report, something tangible and easy to share with stakeholders.

This approach significantly reduced the manual work while still allowing me to add context and design sensitivity where needed. It's a practical hack for getting information right away, especially when the way the survey is built limits what the analytics tools can report, and it helps uncover trends that might otherwise get buried in raw survey data.
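For illustration, a structured prompt of the kind described above might state the task, the allowed categories, and the expected output format before the data itself. The helper below is a hypothetical sketch, not the exact prompt used here: the function name, the category list, and the wording are all assumptions, and it only assembles the text, leaving it to be pasted into ChatGPT.

```python
# Hypothetical category list for illustration; use the categories ChatGPT proposed.
CATEGORIES = ["Music quality", "Usability and product issues"]


def build_prompt(categories: list[str], comments: list[str]) -> str:
    """Assemble a structured prompt: task, allowed categories, output format, then data."""
    category_lines = "\n".join(f"- {c}" for c in categories)
    # Number the comments so the model's answers can be matched back to them.
    comment_lines = "\n".join(f"{i}. {text}" for i, text in enumerate(comments, start=1))
    return (
        "You are analyzing cancellation survey responses.\n"
        "Assign each numbered comment to exactly one of these categories:\n"
        f"{category_lines}\n"
        "Reply with one line per comment in the form '<number>: <category>', "
        "then list the three most frequent pain points.\n\n"
        f"Comments:\n{comment_lines}"
    )


print(build_prompt(CATEGORIES, ["Playlist sync keeps failing"]))
```

Fixing the category list and the reply format up front makes the output easier to compare from one month's export to the next.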

Closing thought:

Leveraging LLMs like ChatGPT doesn’t replace a designer’s judgment; it amplifies it. By offloading repetitive tasks like categorization and trend-spotting, we can focus more on interpreting insights and designing solutions that actually reduce churn. The real value lies not in the automation itself, but in how it frees us to design with greater clarity and impact in our day-to-day work.