Finance Ops Platform MVP: A GenAI-Assisted Case Study, From Ideation to Wireframing - Part 1

Sharing (from a Designer's perspective) my experience using newly-acquired GenAI skills to create a FinOps MVP prototype, from Ideation to Wireframing.

After completing four micro-courses on GenAI topics, I decided to apply my new knowledge practically by attempting to complete an MVP while thoroughly documenting my process. I aimed to explore the integration of AI tools into my workflow and assess how these new skills could influence my future projects.

My objective is to deliver in two phases, focusing on both Product and Marketing. Typically, I would prioritize branding, but for now, I'm concentrating on the product aspect to see how far I can progress.

I initially planned to spend a few hours outside of work on two main goals: developing an MVP prototype and, afterwards, creating a brand identity. These 'few hours' turned into all-nighters. I aim to document this journey to show how I can integrate these technologies into my design process without over-relying on them, and I hope it can be a jumpstart for fellow designers who are also trying to break into AI.

1. Where to Begin?

To kick off the project, I brainstormed various app ideas that aligned with my personal interests and professional experience. I used physical sticky notes to jot down potential industries to explore. After evaluating my options, I decided on a B2B finance model, specifically a Finance Manager app similar to industry giants like Spendex and Moss (a home-grown Berlin app, which I was lucky enough to use before I got laid off the second time).

This choice was influenced by the fact that the B2B finance market is not yet oversaturated, and I have some background knowledge in the finance sector from the B2C side (Bitwala/Nuri, Valor). On a side note: I never imagined I would work in finance or fintech, but I somehow ended up loving it and am trying to find my way back at some point.

2. Setting up ChatGPT 4.1 mini to act as my "Ideation Butler", and using Hybrid prompt engineering techniques

After setting the groundwork, I defined specific parameters for GPT to generate customized responses, ideation, and mockup ideas before officially launching the project. I identified industries typically underserved by financial managers, such as SMEs with limited budgets for budgeting tools. The process began with feedback loops, which I expanded using a combination of Chain-of-Thought and Tree-of-Thought methodologies. Whenever the AI hallucinated or gave inconsistent answers, I corrected it and steered it back in the right direction, and I validated my own ideas before feeding them in.

I personally prefer engaging with a slightly younger working demographic (usually around the 25-45 year old range) in projects, so I initiated a series of prompts with ChatGPT to explore value propositions, market opportunities, and fictional yet data-driven demographic profiles that target this core market before delving into user personas.

3. Ideation for Brand / Product Positioning

Based on the feedback loops I had with ChatGPT, I then carefully selected initial parameters to start generating ideas for my (yet unnamed) product:

4. User Personas

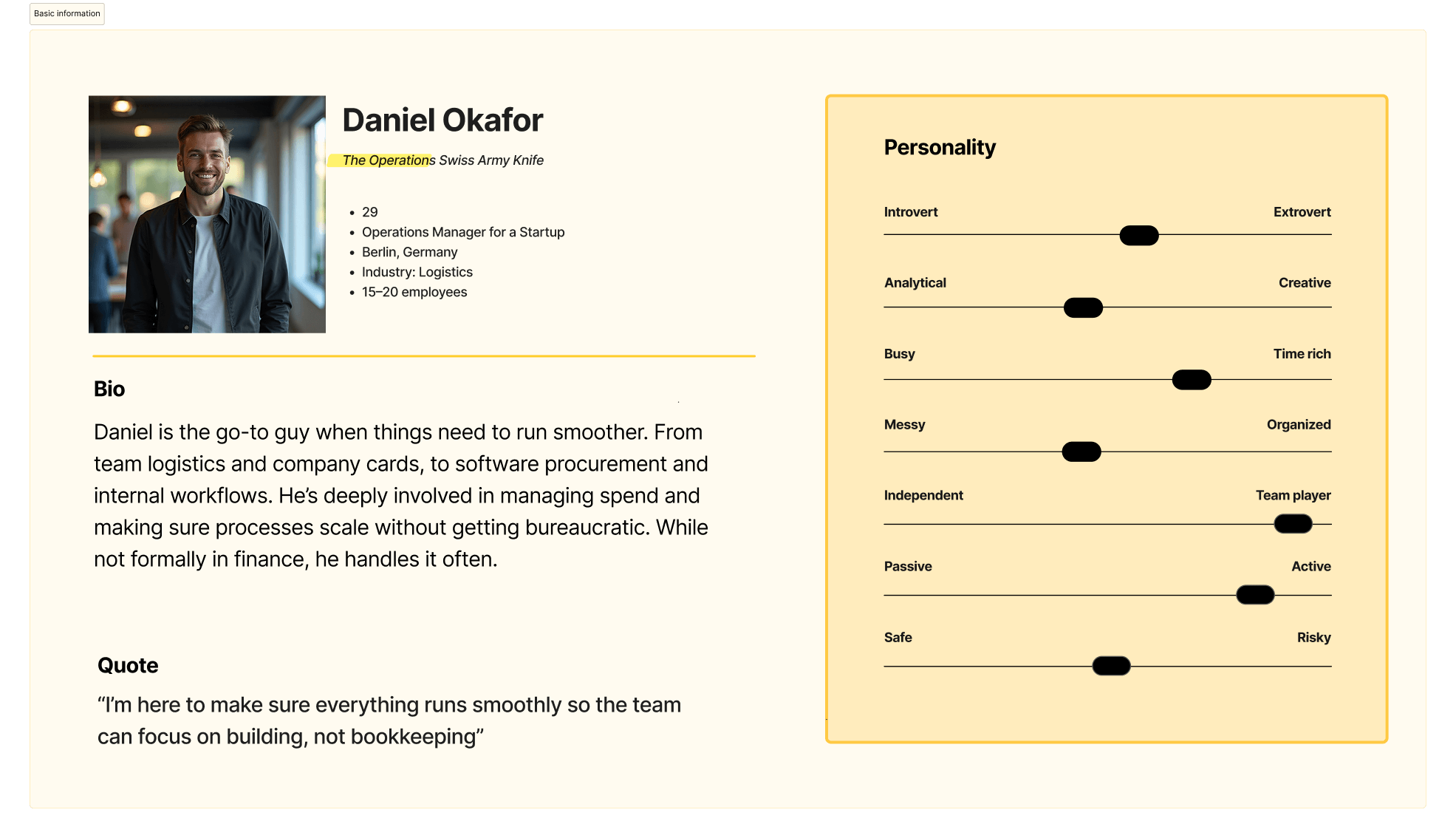

After establishing the initial positioning goals, I began using feedback loops to create initial personas. These personas, generated by ChatGPT, were based on predefined data reflecting trends and situations similar to existing companies. While these personas provide a solid starting point, they may evolve with future user interviews or changes in positioning. This approach allows us to anticipate and adapt to users' future needs within the product.

During the persona generation process, I noticed that GenAI tools occasionally struggle with organizing data, especially when managing multiple personas. While these tools excel in ideation, they often require manual adjustments to refine and perfect the output. For this exploration, I selected persona variations that closely align with the value proposition, with plans to review and adjust them as the process evolves.

I aimed for a balanced persona representation, including entry-level, HR, and C-level users. While I tried to avoid racial bias in image suggestions, the names didn't always match appearances. I refined my selections based on initial image keywords using GenAI depictions.

During the process, I made a few attempts at a more balanced approach that covers key market points, and refined the personas as I saw fit, so the result differs slightly from the initial parameters above.

5. User Journey Mapping

I began user journey mapping by creating feedback loops for the three personas: Alex, Daniel, and Priya. This process involved listing potential user journeys as a starting point. Although the variety of scenarios was initially overwhelming, it was crucial to respect the process and refine as I went.

I refined the user journeys to ensure each persona had a distinct path, correcting GPT's initial assumption that they all worked at the same company. Additionally, I resolved issues with ChatGPT's repetitive archetype descriptions - it usually runs into problems when the prompt Q&A feedback loop gets bulky, and you need to remind it of its mistakes.

During the mapping phase, I identified key wireframe ideas and prioritized features for the MVP. I prioritized Daniel's and Priya's functionalities because they apply universally within organizations. Alex's C-level features, being more niche, might be considered in future iterations.

6. Prioritizing User Needs

To prioritize user needs before wireframing, I listed opportunities for the three personas using the MoSCoW (Must Have, Should Have, Could Have, Won't Have) framework. This helped organize and prioritize the features the personas would want to see in the MVP, considering the application's scope. There are a lot of other methodologies that could be used, but for the purposes of this project I'm cutting it down to the essentials.
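For anyone who prefers to keep the MoSCoW list in a structured form rather than in a chat thread, here is a minimal sketch of how it could be grouped. The feature names and their priorities are hypothetical placeholders I made up for illustration, not the actual list from this project; only the persona names and the four MoSCoW buckets come from the text above.

```python
from collections import defaultdict

# MoSCoW buckets in priority order.
MOSCOW_ORDER = ["must", "should", "could", "wont"]

# Hypothetical features: names and priorities are illustrative assumptions.
FEATURES = [
    {"name": "Expense submission", "persona": "Priya", "priority": "must"},
    {"name": "Approval queue", "persona": "Daniel", "priority": "must"},
    {"name": "Budget alerts", "persona": "Daniel", "priority": "should"},
    {"name": "Executive forecasting", "persona": "Alex", "priority": "could"},
    {"name": "Multi-entity consolidation", "persona": "Alex", "priority": "wont"},
]


def group_by_priority(features):
    """Return feature names grouped into the four MoSCoW buckets."""
    buckets = defaultdict(list)
    for feature in features:
        priority = feature["priority"]
        if priority not in MOSCOW_ORDER:
            raise ValueError(f"Unknown MoSCoW priority: {priority}")
        buckets[priority].append(feature["name"])
    # Always return all four buckets, in priority order, even if empty.
    return {priority: buckets[priority] for priority in MOSCOW_ORDER}


def mvp_scope(features):
    """The MVP starts from the Must-Haves only."""
    return group_by_priority(features)["must"]


if __name__ == "__main__":
    for priority, names in group_by_priority(FEATURES).items():
        print(priority, names)
```

Keeping the persona on each entry makes it easy to check later whether one persona is dominating the Must-Have bucket.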

7. MVP: Going for the Must-Haves First

Going from the MoSCoW list, I then segmented the features into what I deemed to be 'Must-Haves' in this scenario, cutting some suggestions that I considered unrealistic for an MVP while keeping some of the 'Should-Haves' in mind. I then started feeding the must/should-haves into GPT to generate feature specs and initial flows for them. For the uncovered bases (e.g. Login/Onboarding/Settings), I also prompted GPT to add them to the list, which ended up as follows:

Ideation for Wireframe Generation

During the initial wireframe stage, I prioritized aspects based on the MoSCoW sorting, which was half-and-half mine and GPT-assisted. While some crucial components like Onboarding, User Settings, and Account Setup were initially overlooked, the focus was primarily on addressing individual needs - I could have prompted this better, but it's all part of the process.

Competitor Analysis: A Crucial Step in Product Development (Which I should have done earlier on in the process)

Although I initially overlooked this crucial step during the early research phase, it became clear to me that understanding competitors' approaches is important, especially at this stage when things are starting to fall into place. This analysis gave me firsthand insights into UX patterns and design elements that will be beneficial from now on.

By looking into the competitors, I easily spotted a few UI/UX patterns for FinOps products such as:

And from the branding side:

All of these points lead to a valuable starting point to keep in mind once I get into the (presumably) very fun and productive Visual Design and Branding part of this case study.

Following the competitor analysis, I created initial wireframes to identify potential issues and refine the setup. With the framework taking shape, I used GPT to generate a checklist for the initial screens.

Then I settled on these to cover the start-of-flows and get a general idea of what my app would look like. I sorted out what would be deemed 'must-haves' considering my Personas' needs, then tried to filter out the GPT noise, as it was suggesting way too much. I ended up with:

At this point, I decided to switch gears and return to traditional pen-and-paper wireframing. This hands-on approach allowed me to explore ideas more freely and work with something tactile. After roughing it out in my very legible handwriting, I started feeding my wireframes into GenAI tools to check my initial ideas and scale them further.

Information Architecture (IA)

Next up is the Information Architecture. Initially I am going for just the desktop version of the app, as it will predominantly be used in the workplace. A mobile/responsive version will come afterwards, with tools such as virtual card generation among its core features. From there, I instructed GPT to organize the IA based on the 'Must-Haves' from the features checklist. It was pretty nifty that it also organized the URL slug structure, but for now I focused on the high-level/main structural pages of the sitemap.
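To illustrate how a sitemap can map onto a URL slug structure, here is a small sketch. The page names in `SITEMAP` are hypothetical examples of mine (loosely based on the Onboarding/Settings screens mentioned earlier), not the actual IA that GPT produced, and `slugify` and `build_paths` are helper names invented for this example.

```python
import re

# Hypothetical sitemap: nested dicts, where each key is a page title
# and each value holds its child pages. Not the project's real IA.
SITEMAP = {
    "Dashboard": {},
    "Expenses": {
        "Submit Expense": {},
        "Approvals": {},
    },
    "Settings": {
        "User Settings": {},
        "Account Setup": {},
    },
}


def slugify(title):
    """Lowercase a title and replace runs of non-alphanumerics with hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


def build_paths(tree, prefix=""):
    """Walk the sitemap depth-first and return one URL path per page."""
    paths = []
    for title, children in tree.items():
        path = f"{prefix}/{slugify(title)}"
        paths.append(path)
        paths.extend(build_paths(children, path))
    return paths


if __name__ == "__main__":
    for path in build_paths(SITEMAP):
        print(path)
```

Starting with only the top-level keys mirrors the approach described above: the main structural pages come first, and nested pages can be added once the flows are settled.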

Generating Wireframes and Lo-fi Prototypes: From Hand Sketches to an Experiment with 3 Different Models

First, I tried Adobe Firefly's model to see if it could get something out of my initial hand-drawn wires. The result was very unpolished. It picked up some of the details (e.g. tables, the prompt I gave for the sidebar), but in a very jumbled state. I just wanted to try it out, even though I assumed that, since the model was not trained specifically for product design, it would produce something like this.

The next step was to upload the design into ChatGPT's model to see the results. Using the free version, the generation process took some time. The output was closer to my initial specifications than Firefly's, as the plugin was specifically designed for product screens. It provided low-fidelity prototypes that included details like buttons and tables. However, the rendering took a few hours due to the queue, which isn't efficient unless you have a pro subscription. ChatGPT also offered to translate the whole process into code, which I tested out (as I had in previous personal experiments), but that isn't important at this stage.

The next trial was with a Figma plugin called UX Pilot. What it gave me was much more polished and already close to the direction I want to take in the next steps of prototyping. With very specific prompts (e.g. length/width of sections, generation of placeholders), it's simply much better.

It picked up most of the details from the prompts I gave, and it lets you specify details like width/height and style. However, at the moment it only generates one screen at a time, with variations to choose from. It does not have the intelligence to follow through on a flow prompt (as of writing), so I had to run a few more feedback loops on GPT with a revised prompting scenario to cover the initial flow bases.

I found the process very interesting as it lays foundations pretty well in neat Figma files, but there are two downsides to it:

1. Cost - The credit-based subscription is expensive and you can only generate a few screens. I subscribed to the $15 monthly plan (which gives 70 renders, variations counted), which is not a lot.

2. Single screen generation - When I prompted it to follow a flow pattern, e.g. what happens in the signup/login state, it only gave the first screen. I had to re-engineer my prompts so that it covered the flow from end to end, which ate up the render allotment I paid for.
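To put the cost point in numbers, here is a rough back-of-the-envelope sketch. The $15 price and 70 renders come from the plan described above; the number of screens per flow and variations per screen are assumptions I picked for illustration, not measurements from this project.

```python
# Figures from the plan mentioned above.
PLAN_PRICE_USD = 15
RENDERS_PER_MONTH = 70


def cost_per_render(price=PLAN_PRICE_USD, renders=RENDERS_PER_MONTH):
    """Average cost of a single render, variations included."""
    return price / renders


def flows_per_month(screens_per_flow, variations_per_screen,
                    renders=RENDERS_PER_MONTH):
    """How many complete flows fit in the monthly allotment.

    Each screen is generated separately, and every variation
    counts as one render.
    """
    renders_per_flow = screens_per_flow * variations_per_screen
    return renders // renders_per_flow


if __name__ == "__main__":
    print(f"Cost per render: ${cost_per_render():.2f}")
    # Assumed scenario: a 5-screen flow with 3 variations per screen.
    print("Complete flows per month:", flows_per_month(5, 3))
```

Under these assumed numbers, a single 5-screen flow with 3 variations each already uses 15 of the 70 renders, which is why generating one screen at a time drains the allotment so quickly.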

This brief experiment highlighted for me how different GenAI models handle screen rendering and information display. If the prompt is incorrect, the output won't improve, making prompt refinement essential. Take Firefly for example - even though it's a visual-focused generative model, it didn't give the results I wanted (although they were pretty similar to the others, just less refined).

Overall, I came to a point where I was satisfied with the initial wires, and I am now preparing to complete the flows in the next steps.

Next Steps

Going on through the second half,

Closing Thoughts

What I've done so far:

- Selected the project topic and defined market positioning. Also defined the core user personas and mapped out their user journeys

- Identified priorities for the MVP using the MoSCoW model

- Built user flow diagrams for 6 key screens

- Explored and did a competitor analysis of similar products

- Drafted the information architecture/sitemap

- Created wireframes from hand sketches, evolving them into lo/mid-fi screens

There were definitely some challenges along the way. I encountered moments of hallucination, misplaced geotargeting, and subtle signs of bias in AI outputs. Still, using GenAI tools proved incredibly effective for quickly bringing ideas to the forefront. For designers like me, especially when working with lean teams or limited budgets, this approach significantly reduces research time and jumpstarts the creative process. There are still costs to consider. I'm a bit short on liquid funds at the moment, so taking the courses and paying for extras like plugins and my creative app subscriptions hurt a bit, but I believe it's worth it in the long run.

Hope you stay tuned for the next phase, where I'll test, refine, and bring the product vision closer to MVP - and hopefully I'll have a name for it :)